SHARC: Surface Hopping in the Adiabatic Representation Including Arbitrary Couplings - Manual

Contents

1 Introduction

1.1 Capabilities

1.1.1 New features in SHARC Version 2.0

1.1.2 New features in SHARC Version 2.1

1.1.3 New features in SHARC Version 2.1.1

1.1.4 New features in SHARC Version 3.0

1.2 References

1.3 Authors

1.4 Suggestions and Bug Reports

1.5 Notation in this Manual

2 Installation

2.1 How To Obtain

2.2 Terms of Use

2.3 Installation

2.3.1 WFOVERLAP Program

2.3.2 Libraries

2.3.3 Test Suite

2.3.4 Additional Programs

2.3.5 Quantum Chemistry Programs

3 Execution

3.1 Running a single trajectory

3.1.1 Input files

3.1.2 Running the dynamics code

3.1.3 Output files

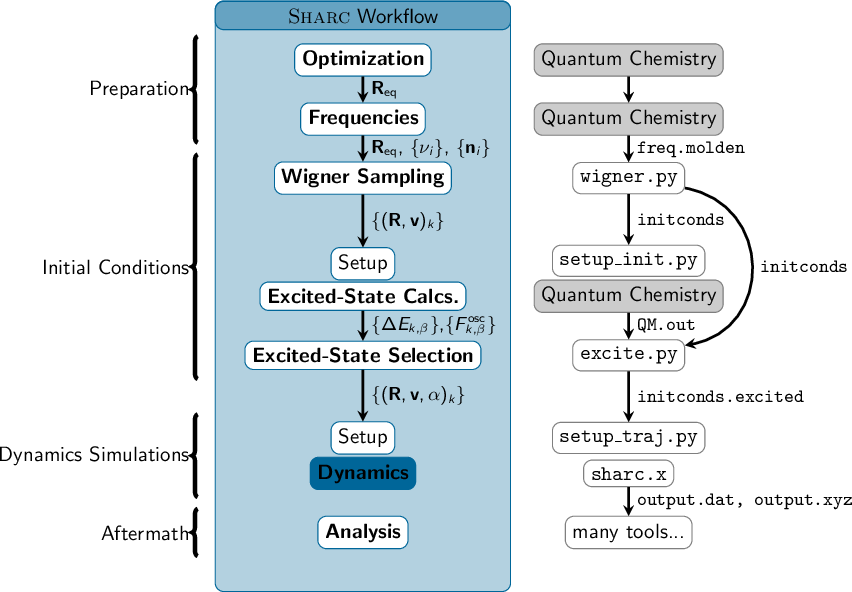

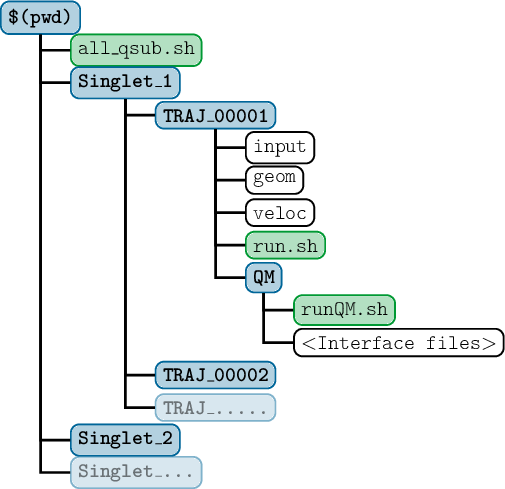

3.2 Typical workflow for an ensemble of trajectories

3.2.1 Initial condition generation

3.2.2 Running the dynamics simulations

3.2.3 Analysis of the dynamics results

3.3 Auxiliary Programs and Scripts

3.3.1 Setup

3.3.2 Analysis

3.3.3 Interfaces

3.3.4 Others

3.4 The PYSHARC dynamics driver

4 Input files

4.1 Main input file

4.1.1 General remarks

4.1.2 Input keywords

4.1.3 Detailed Description of the Keywords

4.1.4 Example

4.2 Geometry file

4.3 Velocity file

4.4 Coefficient file

4.5 Laser file

4.6 Atom mask file

4.7 RATTLE file

5 Output files

5.1 Log file

5.2 Listing file

5.3 Data file

5.3.1 Specification of the data file

5.4 Data file in NetCDF format

5.5 XYZ file

6 Interfaces

6.1 Interface Specifications

6.1.1 QM.in Specification

6.1.2 QM.out Specification

6.1.3 Further Specifications

6.1.4 Save Directory Specification

6.2 Overview over Interfaces

6.2.1 Example Directory

6.3 MOLPRO Interface

6.3.1 Template file: MOLPRO.template

6.3.2 Resource file: MOLPRO.resources

6.3.3 Error checking

6.3.4 Things to keep in mind

6.3.5 Molpro input generator: molpro_input.py

6.4 MOLCAS Interface

6.4.1 Template file: MOLCAS.template

6.4.2 Resource file: MOLCAS.resources

6.4.3 Template file generator: molcas_input.py

6.4.4 QM/MM key file: MOLCAS.qmmm.key

6.4.5 QM/MM connection table file: MOLCAS.qmmm.table

6.5 COLUMBUS Interface

6.5.1 Template input

6.5.2 Resource file: COLUMBUS.resources

6.5.3 Template setup

6.6 Analytical PESs Interface

6.6.1 Parametrization

6.6.2 Template file: Analytical.template

6.7 ADF Interface

6.7.1 Template file: ADF.template

6.7.2 Resource file: ADF.resources

6.7.3 QM/MM force field file: ADF.qmmm.ff

6.7.4 QM/MM connection table file: ADF.qmmm.table

6.7.5 Input file generator: ADF_input.py

6.7.6 Frequencies converter: ADF_freq.py

6.8 RICC2 Interface

6.8.1 Template file: RICC2.template

6.8.2 Resource file: RICC2.resources

6.8.3 QM/MM force field file

6.8.4 QM/MM connection table file: RICC2.qmmm.table

6.9 LVC Interface

6.9.1 Input files

6.9.2 Template File Setup: wigner.py, setup_LVCparam.py, and create_LVCparam.py

6.10 GAUSSIAN Interface

6.10.1 Template file: GAUSSIAN.template

6.10.2 Resource file: GAUSSIAN.resources

6.11 ORCA Interface

6.11.1 Template file: ORCA.template

6.11.2 Resource file: ORCA.resources

6.11.3 QM/MM force field file

6.11.4 QM/MM connection table file: ORCA.qmmm.table

6.12 BAGEL Interface

6.12.1 Template file: BAGEL.template

6.12.2 Resource file: BAGEL.resources

6.13 The WFOVERLAP Program

6.13.1 Installation

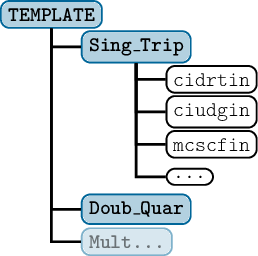

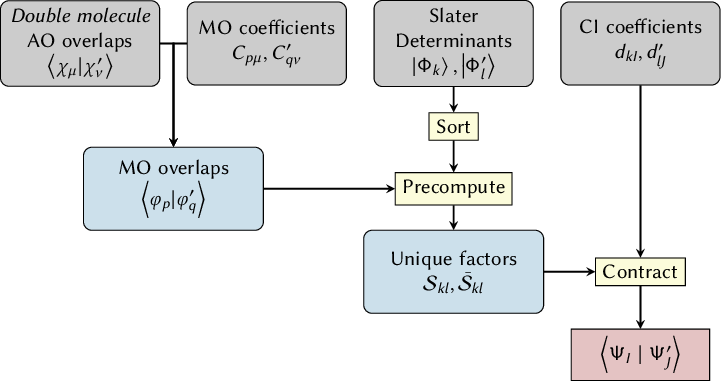

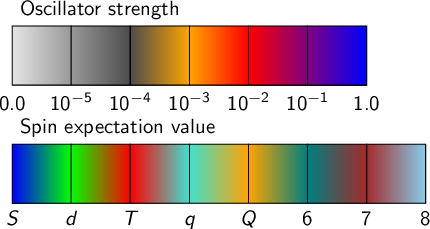

6.13.2 Workflow

6.13.3 Calling the program

6.13.4 Input data

6.13.5 Output

7 Auxiliary Scripts

7.1 Wigner Distribution Sampling: wigner.py

7.1.1 Usage

7.1.2 Normal mode types

7.1.3 Non-default masses

7.1.4 Sampling at finite temperatures

7.1.5 Output

7.2 Vibrational State Selected Sampling: state_selected.py

7.2.1 Usage

7.2.2 Major options

7.2.3 Normal mode types

7.2.4 Non-default masses

7.2.5 Output

7.3 AMBER Trajectory Sampling: amber_to_initconds.py

7.3.1 Usage

7.3.2 Time Step

7.3.3 Atom Types and Masses

7.3.4 Output

7.4 SHARC Trajectory Sampling: sharctraj_to_initconds.py

7.4.1 Usage

7.4.2 Random Picking of Time Step

7.4.3 Output

7.5 Setup of Initial Calculations: setup_init.py

7.5.1 Usage

7.5.2 Input

7.5.3 Interface-specific input

7.5.4 Input for Run Scripts

7.5.5 Output

7.6 Excitation Selection: excite.py

7.6.1 Usage

7.6.2 Input

7.6.3 Matrix diagonalization

7.6.4 Output

7.6.5 Specification of the initconds.excited file format

7.7 Setup of Trajectories: setup_traj.py

7.7.1 Input

7.7.2 Interface-specific input

7.7.3 Output control

7.7.4 Run script setup

7.7.5 Output

7.8 Laser field generation: laser.x

7.8.1 Usage

7.8.2 Input

7.9 Calculation of Absorption Spectra: spectrum.py

7.9.1 Input

7.9.2 Output

7.9.3 Error Analysis

7.10 File transfer: retrieve.sh

7.11 Data Extractor: data_extractor.x

7.11.1 Usage

7.11.2 Output

7.12 Data Extractor for NetCDF: data_extractor_NetCDF.x

7.12.1 Usage

7.12.2 Output

7.13 Data Converter for NetCDF: data_converter.x

7.13.1 Usage

7.13.2 Output

7.14 Plotting the Extracted Data: make_gnuscript.py

7.15 Ensemble Diagnostics Tool: diagnostics.py

7.15.1 Usage

7.15.2 Input

7.16 Calculation of Ensemble Populations: populations.py

7.16.1 Usage

7.16.2 Output

7.17 Calculation of Numbers of Hops: transition.py

7.17.1 Usage

7.18 Fitting population data to kinetic models: make_fit.py

7.18.1 Usage

7.18.2 Input

7.18.3 Output

7.19 Fitting population data to kinetic models: make_fitscript.py

7.19.1 Usage

7.19.2 Input

7.19.3 Output

7.20 Estimating Errors of Fits: bootstrap.py

7.20.1 Usage

7.20.2 Input

7.20.3 Output

7.21 Obtaining Special Geometries: crossing.py

7.21.1 Usage

7.21.2 Output

7.22 Internal Coordinates Analysis: geo.py

7.22.1 Input

7.22.2 Options

7.23 Essential Dynamics Analysis: trajana_essdyn.py

7.23.1 Usage

7.23.2 Input

7.23.3 Output

7.24 Normal Mode Analysis: trajana_nma.py

7.24.1 Usage

7.24.2 Input

7.24.3 Output

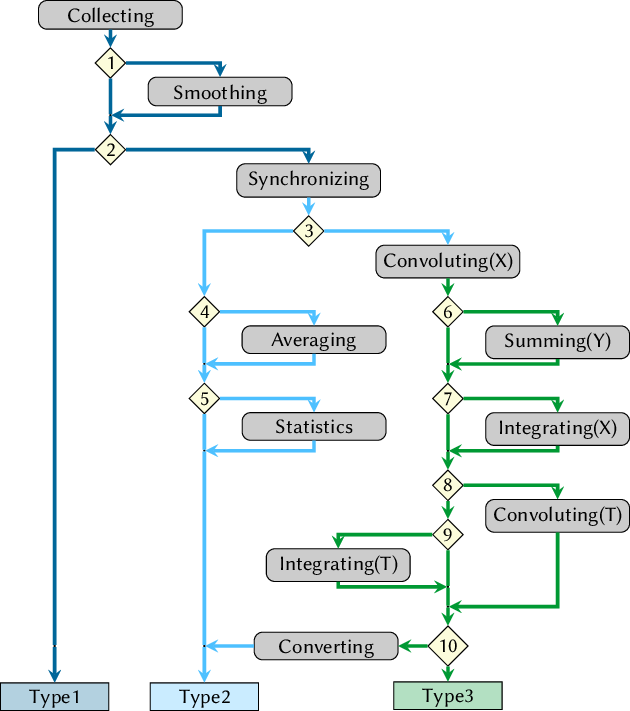

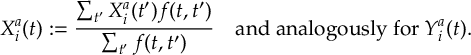

7.25 General Data Analysis: data_collector.py

7.25.1 Usage

7.25.2 Input

7.25.3 Output

7.26 Optimizations: orca_External and setup_orca_opt.py

7.26.1 Usage

7.26.2 Input

7.26.3 Output

7.26.4 Description of orca_External

7.27 Single Point Calculations: setup_single_point.py

7.27.1 Usage

7.27.2 Input

7.27.3 Output

7.28 Format Data from QM.out Files: QMout_print.py

7.28.1 Usage

7.28.2 Output

7.29 Diagonalization Helper: diagonalizer.x

8 Methods and Algorithms

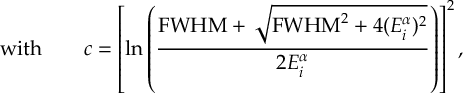

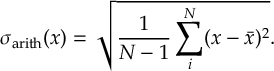

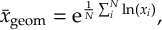

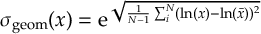

8.1 Absorption Spectrum

8.2 Active and inactive states

8.3 Amdahl’s Law

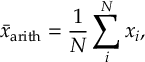

8.4 Bootstrapping for Population Fits

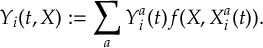

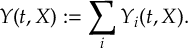

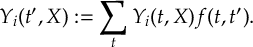

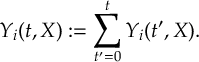

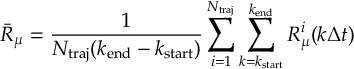

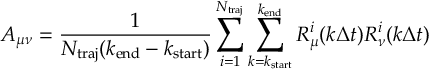

8.5 Computing electronic populations

8.6 Damping

8.7 Decoherence

8.7.1 Energy-based decoherence

8.7.2 Augmented FSSH decoherence

8.8 Essential Dynamics Analysis

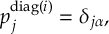

8.9 Excitation Selection

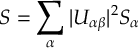

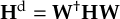

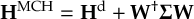

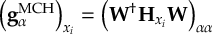

8.9.1 Excitation Selection with Diabatization

8.10 Global fits and kinetic models

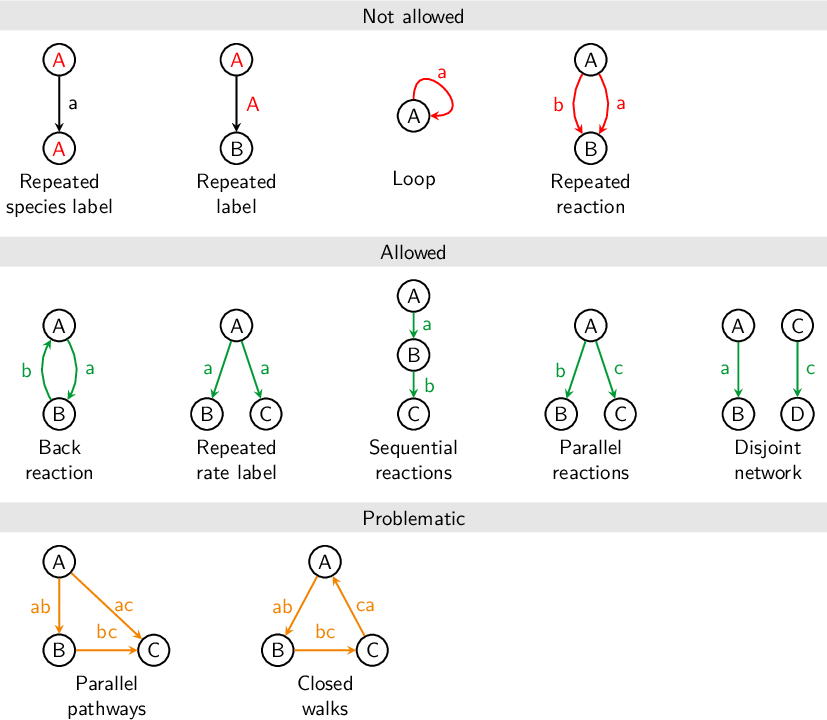

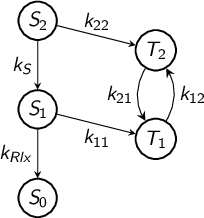

8.10.1 Reaction networks

8.10.2 Kinetic models

8.10.3 Global fit

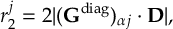

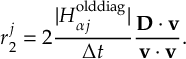

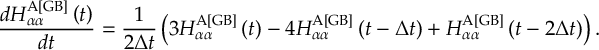

8.11 Gradient transformation

8.11.1 Nuclear gradient tensor transformation scheme

8.11.2 Time derivative matrix transformation scheme

8.11.3 Dipole moment derivatives

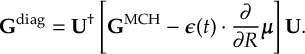

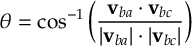

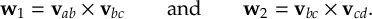

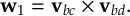

8.12 Internal coordinates definitions

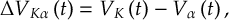

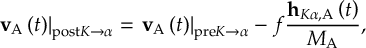

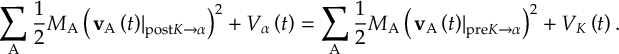

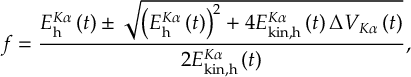

8.13 Kinetic energy adjustments

8.13.1 Reflection for frustrated hops

8.13.2 Choices of momentum adjustment direction

8.14 Projection operator

8.15 Fewest switches with time uncertainty

8.16 Laser fields

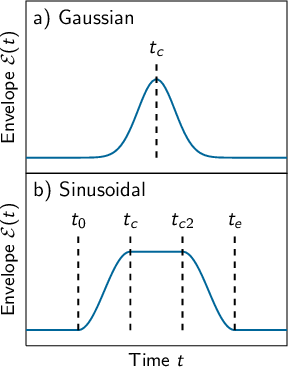

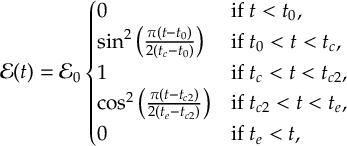

8.16.1 Form of the laser field

8.16.2 Envelope functions

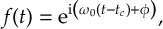

8.16.3 Field functions

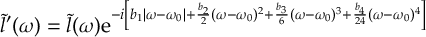

8.16.4 Chirped pulses

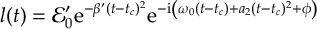

8.16.5 Quadratic chirp without Fourier transform

8.17 Laser interactions

8.17.1 Surface Hopping with laser fields

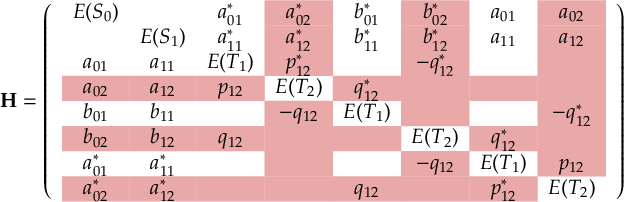

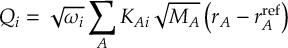

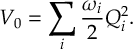

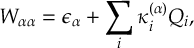

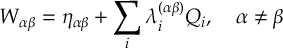

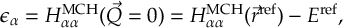

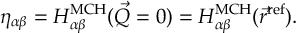

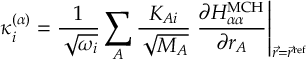

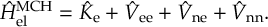

8.18 Linear Vibronic Coupling Models

8.18.1 Obtaining LVC parameters from ab initio data

8.19 Normal Mode Analysis

8.20 Optimization of Crossing Points

8.21 Phase tracking

8.21.1 Phase tracking of the transformation matrix

8.21.2 Tracking of the phase of the MCH wave functions

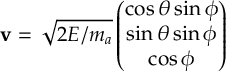

8.22 Random initial velocities

8.23 Representations

8.23.1 Current state in MCH representation

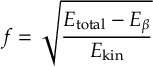

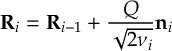

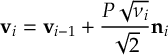

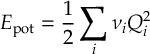

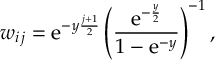

8.24 Sampling from Wigner Distribution

8.24.1 Sampling at Non-zero Temperature

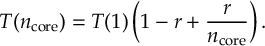

8.25 Scaling

8.26 Seeding of the RNG

8.27 Selection of gradients and nonadiabatic couplings

8.28 State ordering

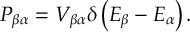

8.29 Surface Hopping

8.30 Self-Consistent Potential Methods

8.31 Effective Nonadiabatic Coupling Vector

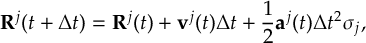

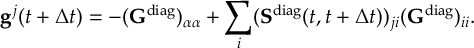

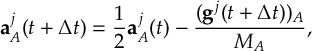

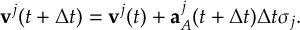

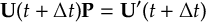

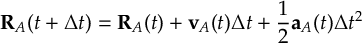

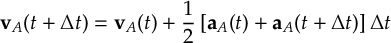

8.32 Velocity Verlet

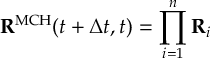

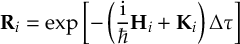

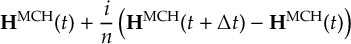

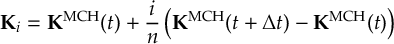

8.33 Wavefunction propagation

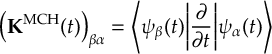

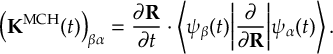

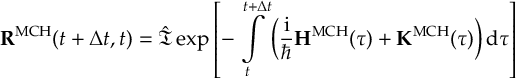

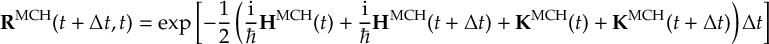

8.33.1 Propagation using nonadiabatic couplings

8.33.2 Propagation using overlap matrices – Local diabatization

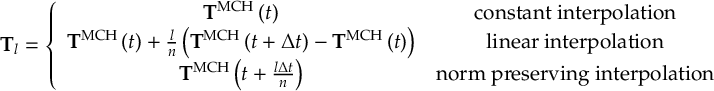

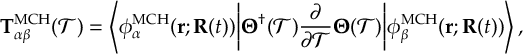

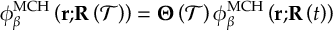

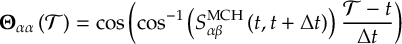

8.33.3 Propagation using overlap matrices – Norm-preserving interpolation

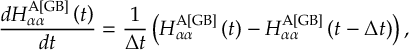

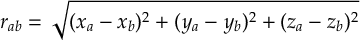

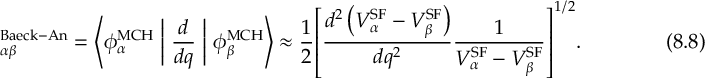

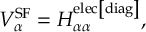

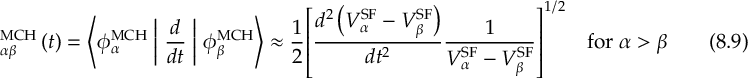

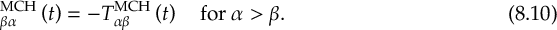

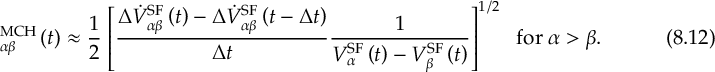

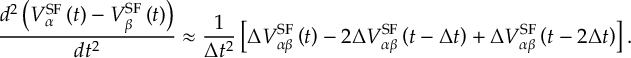

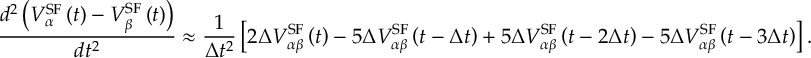

8.34 Evaluation of Time Derivative Couplings and Curvature Approximation

Chapter 1

Introduction

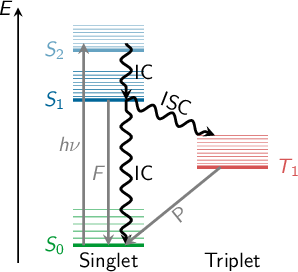

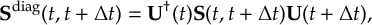

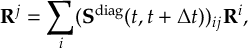

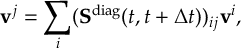

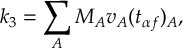

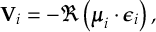

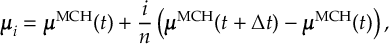

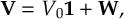

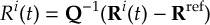

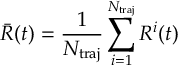

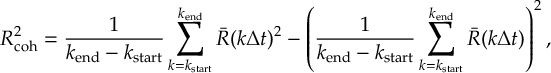

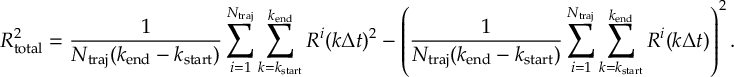

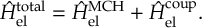

When a molecule is irradiated by light, a number of dynamical processes can take place, in which the molecule redistributes the energy among different electronic and vibrational degrees of freedom. Kasha’s rule [1] states that radiationless transfer from higher excited singlet states to the lowest-lying excited singlet state (S1) is faster than fluorescence (F). This radiationless transfer is called internal conversion (IC) and involves a change between electronic states of the same multiplicity. If a transition occurs between electronic states of different spin, the process is called intersystem crossing (ISC). A typical ISC process is from a singlet to a triplet state, and once the lowest triplet is populated, phosphorescence (P) can take place. In Figure 1.1, radiative (F and P) and radiationless (IC and ISC) processes are summarized in a so-called Jablonski diagram.

The non-radiative IC and ISC processes are fundamental concepts which play a decisive role in photochemistry and photobiology. IC processes are present in the excited-state dynamics of many organic and inorganic molecules, whose applications range from solar energy conversion to drug therapy. Even many very small molecules, for example O2, O3, SO2, NO2, and other nitrogen oxides, show efficient IC, which has important consequences for atmospheric chemistry and the study of the environment and pollution. IC is also the first step of the biological process of visual perception, where the retinal moiety of rhodopsin absorbs a photon and non-radiatively performs a torsion around one of the double bonds, changing the conformation of the protein and inducing a neural signal. Similarly, protection of the human body from the influence of UV light is achieved through very efficient IC in DNA, proteins, and melanins. Ultrafast IC to the electronic ground state quickly converts the excitation energy of the UV photons into nuclear kinetic energy, which is dissipated harmlessly as heat to the environment.

ISC processes are completely forbidden in the framework of the non-relativistic Schrödinger equation, but they become allowed when spin-orbit couplings, a relativistic effect [2], are included. Spin-orbit coupling depends on the nuclear charge and becomes stronger for heavy atoms; it is therefore typically known as a “heavy atom” effect. However, it has recently been recognized that even for molecules with only first- and second-row atoms, ISC can be relevant and can compete with IC on the same time scales. A small selection of the growing number of molecules for which efficient ISC on a sub-ps time scale has been predicted includes SO2 [3,4,5], benzene [6], aromatic nitro compounds [7], and DNA nucleobases and derivatives [8,9,10,11,12].

Theoretical simulations can contribute greatly to the understanding of non-radiative processes by following the nuclear motion on the excited-state potential energy surfaces (PES) in real time. These simulations are called excited-state dynamics simulations.

Since the Born-Oppenheimer approximation is not applicable for this kind of dynamics, nonadiabatic effects need to be incorporated into the simulations.

The principal approach to excited-state dynamics simulations is to numerically integrate the time-dependent Schrödinger equation, which is usually called full quantum dynamics (QD) simulation. Given accurate PESs, QD is able to match experimental accuracy. However, the need for “a priori” knowledge of the full multi-dimensional PES quickly renders this type of simulation unfeasible for more than a few degrees of freedom. Several alternative methodologies can alleviate this problem. One of the most popular is surface hopping nonadiabatic dynamics.

Surface hopping was originally devised by Tully [13] and later greatly improved by the “fewest-switches criterion” [14]. It has been reviewed extensively since then; see, e.g., [15,16,17,18,19].

In surface hopping, the motion of the excited-state wave packet is approximated by the motion of an ensemble of many independent, classical trajectories. Each trajectory is at every instant of time tied to one particular PES, and the nuclear motion is integrated using the gradient of this PES. However, nonadiabatic population transfer can lead to the switching of a trajectory from one PES to another PES. This switching (also called “hopping”, which is the origin of the name “surface hopping”) is based on a stochastic algorithm, taking into account the change of the electronic population from one time step to the next one.
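The stochastic switching step can be illustrated with a short sketch. It is not the SHARC implementation; it only shows how, given a set of hopping probabilities for one time step (the hypothetical input `hop_probabilities`), a single uniform random number decides whether a trajectory stays on its current surface or hops to another one. How the probabilities are derived from the change of the electronic populations depends on the chosen scheme and is not shown here. The function name and the numbers are assumptions made for this example.

```python
import random


def select_target_state(current_state, hop_probabilities, rng):
    """Pick the active state for the next time step.

    hop_probabilities[k] is the (hypothetical) probability of hopping from
    current_state to state k during this step; the entry for current_state
    itself is ignored. rng is a random.Random instance.
    """
    r = rng.random()  # uniform random number in [0, 1)
    cumulative = 0.0
    for target, prob in enumerate(hop_probabilities):
        if target == current_state:
            continue
        cumulative += max(prob, 0.0)  # negative probabilities count as zero
        if r < cumulative:
            return target  # hop to this surface
    return current_state  # no hop: stay on the current surface


# Illustration: many independent draws from the same probabilities.
rng = random.Random(1234)
counts = [0, 0, 0]
for _ in range(10000):
    counts[select_target_state(0, [0.0, 0.1, 0.05], rng)] += 1
print(counts)
```

Because each draw uses its own random number, repeating the step for many independent trajectories reproduces the probabilities only on average, which is why an ensemble of trajectories is needed.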

The advantages of the surface hopping methodology and thus its popularity are well summarized in Ref. [15]:

- The method is conceptually simple, since it is based on classical mechanics. The nuclear propagation is based on Newton’s equations and can be performed in Cartesian coordinates, avoiding any problems with curved coordinate systems as in QD.

- For the propagation of the trajectories only local information of the PESs is needed. This avoids the calculation of the full, multi-dimensional PES in advance, which is the main bottleneck of QD methods. In surface hopping dynamics, all degrees of freedom can be included in the simulation. Additionally, all necessary quantities can be calculated on-demand, usually called “on-the-fly” in this context.

- The independent trajectories can be trivially parallelized.

The strongest of these points is of course the fact that all degrees of freedom can easily be included in the calculations, which makes it possible to describe large systems.

One should note, however, that surface hopping methods in the standard formulation [13,14] cannot treat some purely quantum-mechanical effects like tunneling, because the trajectories are classical (although tunneling for selected degrees of freedom is possible [20]). Additionally, quantum coherence between the electronic states is usually described poorly because of the independent-trajectory ansatz. This can be treated with some ad hoc corrections, e.g., in [21].

In the original surface hopping method, only nonadiabatic couplings are considered, which allows population transfer only between electronic states of the same multiplicity (IC).

The SHARC methodology is a generalization of standard surface hopping, since it allows any type of coupling to be included. Beyond nonadiabatic couplings (for IC), spin-orbit couplings (for ISC) or interactions of dipole moments with electric fields (to explicitly describe laser-induced processes) can be included.

A number of methodologies for surface hopping that include one or another type of potential coupling have been proposed [22,23,24,25,26,27,28], but SHARC can include all types of potential couplings on the same footing.

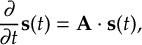

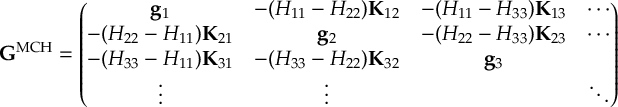

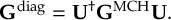

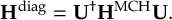

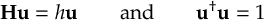

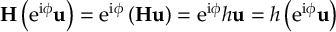

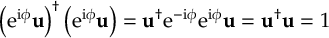

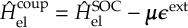

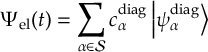

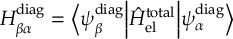

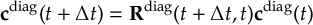

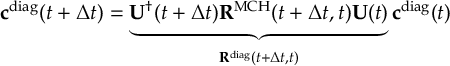

The SHARC methodology is an extension of standard surface hopping that allows these kinds of couplings to be included. The central idea of SHARC is to obtain a fully diagonal Hamiltonian, which is adiabatic with respect to all couplings. The diagonal Hamiltonian is obtained by a unitary transformation of the Hamiltonian including all couplings. Surface hopping is conducted on the transformed electronic states.

This has a number of advantages over the standard surface hopping methodology, where no diagonalization is performed:

- Potential couplings (like spin-orbit couplings and laser-dipole couplings) are usually delocalized. Surface hopping, however, rests on the assumption that the couplings are localized and hence surface hops only occur in the small region where the couplings are large. Within SHARC, by transforming away the potential couplings, additional terms of nonadiabatic (kinetic) couplings arise, which are localized.

- The potential couplings have an influence on the gradients acting on the nuclei. To a good approximation, within SHARC it is possible to include this influence in the dynamics.

- When including spin-orbit couplings for states of higher multiplicity, diagonalization solves the problem of rotational invariance of the multiplet components (see [26]).
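The diagonalization step described above can be sketched numerically. The example below is only an illustration with made-up numbers, not the SHARC code: it builds a small Hermitian model Hamiltonian whose off-diagonal elements stand in for potential couplings (for example spin-orbit couplings), diagonalizes it with `numpy.linalg.eigh`, and checks that the unitary transformation U†HU is diagonal. The matrix values and variable names are assumptions chosen for this example.

```python
import numpy as np

# Hypothetical 3-state model Hamiltonian (arbitrary energy units).
# Diagonal: state energies without the potential couplings.
# Off-diagonal: complex couplings, e.g. standing in for spin-orbit couplings.
h_coupled = np.array(
    [
        [0.00, 0.01 + 0.02j, 0.00],
        [0.01 - 0.02j, 0.10, 0.03j],
        [0.00, -0.03j, 0.15],
    ],
    dtype=complex,
)

# The matrix must be Hermitian for eigh to apply.
assert np.allclose(h_coupled, h_coupled.conj().T)

# eigh returns the eigenvalues in ascending order and a unitary matrix U
# whose columns are the corresponding eigenvectors.
energies, u = np.linalg.eigh(h_coupled)

# Transform the Hamiltonian: H_diag = U^dagger H U.
h_diag = u.conj().T @ h_coupled @ u

off_diagonal = h_diag - np.diag(np.diag(h_diag))
print("diagonal energies:", energies)
print("largest off-diagonal element:", np.abs(off_diagonal).max())
```

This static example only shows the transformation at a single geometry. As noted in the list above, transforming the potential couplings away gives rise to additional nonadiabatic coupling terms, which are not part of this sketch.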

The SHARC suite of programs is an implementation of the SHARC method. Besides the core dynamics code, it comes with a number of tools aiding in the setup, maintenance and analysis of the trajectories.

1.1 Capabilities

The main features of the SHARC suite are:

- Non-adiabatic dynamics based on the surface hopping methodology able to describe internal conversion and intersystem crossing with any number of states (singlets, doublets, triplets, or higher multiplicities).

- Algorithms for stable wave function propagation in the presence of very small or very large couplings.

- Inclusion of interactions with laser fields in the long-wavelength limit. The derivatives of the dipole moments can be included in strong-field applications.

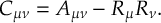

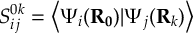

- Propagation using either nonadiabatic coupling vectors $\langle\alpha|\partial/\partial\mathbf{R}|\beta\rangle$ or wave function overlaps $\langle\alpha(t_0)|\beta(t)\rangle$ (via the local diabatization procedure [21]).

- Gradients including the effects of spin-orbit couplings (with the approximation that the diabatic spin-orbit couplings are slowly varying).

- Flexible interface to quantum chemistry programs. Existing interfaces to:

  - MOLPRO 2010 and 2012: SA-CASSCF

  - OPENMOLCAS 18.0: SA-CASSCF, SS-CASPT2, MS-CASPT2, SA-CASSCF+QM/MM

  - COLUMBUS 7: SA-CASSCF, SA-RASSCF, MR-CISD

  - ADF 2017+: TD-DFT, TD-DFT+QM/MM

  - TURBOMOLE 7: ADC(2), CC2

  - GAUSSIAN 09 and 16: TD-DFT

  - ORCA 4.1: TD-DFT

  - Interface for analytical potentials

  - Interface for linear-vibronic coupling (LVC) models

- Energy-difference-based partial coupling approximation to speed up calculations [29].

- Energy-based decoherence correction [21] or augmented-FSSH decoherence correction [30].

- Calculation of Dyson norms for single-photon ionization spectra (for most interfaces) [31].

- On-the-fly wave function analysis with TheoDORE [32,33,34] (for some interfaces).

- Suite of auxiliary Python scripts for all steps of the setup procedure and for various analysis tasks.

- Comprehensive tutorial.

1.1.1 New features in SHARC Version 2.0

These features are new in SHARC Version 2.0 (2018):

- Dynamics program sharc.x:

  - New methods: AFSSH for decoherence, GFSH for hopping probabilities, reflection after frustrated hop.

  - Atom masking for size-extensive decoherence and rescaling.

  - Improved wave function and U matrix phase tracking.

  - Support for on-the-fly computation of Dyson norms and THEODORE descriptors.

  - Option to gracefully stop trajectories after any time step.

- Fully integrated, efficient wave function overlap program wfoverlap.x

- Quantum chemistry interfaces:

  - MOLPRO: overhauled, uses wfoverlap.x, gives consistent phase between CASSCF and CI wave functions, can do Dyson norms, parallelizes independent job parts.

  - MOLCAS: overhauled, can do (MS)-CASPT2 (only numerical gradients), QM/MM, Cholesky decomposition, Dyson norms, parallelizes independent job parts, works with OPENMOLCAS version 18.

  - COLUMBUS: overhauled, uses wfoverlap.x, can use DALTON integrals, can use MOLCAS orbitals, can do Dyson norms.

  - ANALYTICAL: –

  - TURBOMOLE: new interface, can do ADC(2) and CC2; has SOC (for ADC(2)), uses wfoverlap.x, works with THEODORE.

  - ADF: new interface, can do TD-DFT; has SOC, uses wfoverlap.x, Dyson norms, has QM/MM, works with THEODORE.

  - GAUSSIAN: new interface, can do TD-DFT; uses wfoverlap.x, has Dyson norms, works with THEODORE.

  - LVC: new interface, can do (analytical) linear vibronic coupling models.

- Auxiliary scripts:

  - wigner.py: elevated temperature sampling, LVC model setup.

  - amber_to_initconds.py: new script, converts AMBER trajectories to SHARC initial conditions.

  - sharctraj_to_initconds.py: new script, converts SHARC trajectories to SHARC initial conditions.

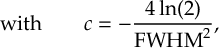

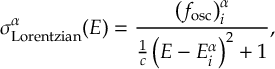

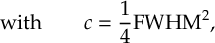

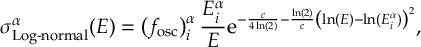

  - spectrum.py: log-normal convolution, density of state spectra.

  - data_extractor.x: new quantities to extract.

  - diagnostics.py: new script, checks all trajectories prior to analysis.

  - populations.py: new analysis modes.

  - transition.py: new script, computes total number of hops in ensemble.

  - make_fitscript.py: new script, prepares kinetic model fits to obtain time constants from populations.

  - bootstrap.py: new script, calculates error estimates for time constants.

  - trajana_essdyn.py: new script, performs essential dynamics analysis.

  - trajana_nma.py: new script, performs normal mode analysis.

  - data_collector.py: new script, performs generic data analysis (collecting, smoothing, convolution, integration).

  - orca_External: new script, allows optimization with ORCA and SHARC.

  - Several input generation helpers.

- Reworked tutorial using OPENMOLCAS (which is available at no cost).

1.1.2 New features in SHARC Version 2.1

These features are new in SHARC Version 2.1 (2019):

- Dynamics program sharc.x:

  - New methods: Rescaling along gradient difference vector, forced hops to ground state.

  - Output: Control of output step, writing to NetCDF format.

- Dynamics program pysharc:

  - Dynamics driver based on Python with a C extension; it executes the routines of sharc.x directly within Python, which avoids file-based interface operations. Provides extremely efficient SHARC dynamics with LVC models.

- Data extraction:

  - New options in data_extractor.x (e.g., populations according to [35]).

  - New extractor for NetCDF files (data_extractor_NetCDF.x).

  - Data converter (ASCII to NetCDF) (data_converter.x), useful for archiving.

- Quantum chemistry interfaces:

  - MOLCAS: now works with OPENMOLCAS version 18 or MOLCAS 8.3 and 8.4.

  - TURBOMOLE: now works with TURBOMOLE versions 7.1 to 7.4. Can now do QM/MM with TINKER.

  - ORCA: new interface, can do TD-DFT; uses wfoverlap.x, has Dyson norms, works with THEODORE, can do QM/MM with TINKER.

  - BAGEL: new interface, can do CASSCF, CASPT2, (X)MS-CASPT2 with analytical gradients and nonadiabatic couplings, has overlaps and Dyson norms from wfoverlap.x.

  - ADF interface: updated for ADF2019 and newer, not backward compatible with older ADF versions.

- Auxiliary scripts:

  - sharcvars.sh, sharcvars.csh: can be sourced to automatically set all relevant environment variables for sharc.x, pysharc, and programs using NetCDF.

  - make_fit.py: new script, more flexible, efficient, and integrated script for kinetic model fitting. Replaces the scripts make_fitscript.py and bootstrap.py.

  - setup_LVCparam.py and create_LVCparam.py: new setup scripts to automatically generate LVC models from quantum chemistry calculations (also without nonadiabatic coupling vectors).

1.1.3 New features in SHARC Version 2.1.1

These features are new in SHARC Version 2.1.1 (2021):

- Dynamics program sharc.x:

  - RATTLE algorithm for constraining bond lengths

  - Keyword for restart control

  - Bug fixes

- SchNarc for machine learning properties is available at https://github.com/schnarc/SchNarc

1.1.4 New features in SHARC Version 3.0

These features are new in SHARC Version 3.0 (2023):

- Dynamics program sharc.x:

  - CSDM: coherent switching with decay of mixing

  - SCDM: self-consistent decay of mixing

  - tSE: time-derivative semiclassical Ehrenfest

  - tCSDM: time-derivative coherent switching with decay of mixing

  - κSE: curvature-driven semiclassical Ehrenfest

  - κCSDM: curvature-driven coherent switching with decay of mixing

  - κTSH: curvature-driven trajectory surface hopping

  - Time-derivative-matrix (TDM) gradient correction scheme

  - TSH-FSTU: trajectory surface hopping with time uncertainty

  - Projection operator that conserves angular momentum and center of mass motion

  - New options for momentum adjustment vector and velocity reflection vector in TSH

  - Adaptive time step velocity Verlet algorithm

  - Bug fixes

- Interfaces and scripts *.py:

  - Switch to Python 3

  - State selected initial conditions: state_selected.py

- MOLCAS interface SHARC_MOLCAS.py:

  - MS-PDFT:

  - XMS-PDFT

  - CMS-PDFT

1.2 References

The following references should be cited when using the SHARC suite:

Details can be found in the following references:

The theoretical background of SHARC is described in Refs. [38,39,40,41,42,36,43].

Applications of the SHARC code can be found in Refs. [9,11,44,4,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70].

Other features implemented in the SHARC suite are described in the following references:

- Energy-based decoherence correction: [21].

- Augmented-FSSH decoherence correction: [30].

- Global flux SH: [71].

- Local diabatization and wave function overlap calculation: [72,73,74].

- Sampling of initial conditions from a quantum-mechanical harmonic Wigner distribution: [75,76,77].

- Excited state selection for initial condition generation: [78].

- Calculation of ring puckering parameters and their classification: [79,80].

- Normal mode analysis [81,82] and essential dynamics analysis: [83,82].

- Bootstrapping for error estimation: [84].

- Crossing point optimization: [85,86].

- Computation of ionization spectra: [31,87].

- Wave function comparison with overlaps: [88].

- Dynamics with linear vibronic coupling models: [89].

- Computation of electronic populations: [35].

- Dynamics with neural network potentials and other machine learning properties: [90].

- Coherent switching with decay of mixing: [91,92].

- Time derivative algorithms tSE and tCSDM: [93].

- Curvature-driven algorithms κSE, κTSH, and κCSDM: [94].

- Projection operator that conserves angular momentum and center of mass motion: [95].

- Time-derivative-matrix gradient correction scheme: [96].

- Trajectory surface hopping with time uncertainty: [97,98].

The quantum chemistry programs to which interfaces with SHARC exist are described in the following sources:

- MOLPRO: [99,100],

- MOLCAS: [101,102,103,104],

- COLUMBUS: [105,106,107,108],

- ADF: [109],

- TURBOMOLE: [110],

- GAUSSIAN: [111,112],

- ORCA: [113],

- BAGEL: [114].

Others:

1.3 Authors

The SHARC suite has been developed by (listed alphabetically):

Andrew Atkins,

Davide Avagliano,

Sandra Gómez,

Leticia González,

Jesús González-Vázquez,

Moritz Heindl,

Lea M. Ibele,

Simon Kropf,

Sebastian Mai,

Philipp Marquetand,

Maximilian F. S. J. Menger,

Markus Oppel,

Felix Plasser,

Severin Polonius,

Martin Richter,

Matthias Ruckenbauer,

Yinan Shu,

Ignacio Sóla,

Donald G. Truhlar,

Linyao Zhang, and

Patrick Zobel.

1.4 Suggestions and Bug Reports

Bug reports and suggestions for possible features can be submitted to sharc@univie.ac.at.

1.5 Notation in this Manual

Names of programs

The SHARC suite consists of Fortran90 programs as well as Python and Shell scripts. The executable Fortran90 programs are denoted by the extension .x, the Python scripts have the extension .py and the Shell scripts .sh. Within this manual, all program names are given in bold monospaced font.

Chapter 2

Installation

2.1 How To Obtain

SHARC can be obtained from the SHARC homepage www.sharc-md.org. In the Download section, follow the link to GITHUB to clone or download the latest SHARC release version.

Note that you accept the Terms of Use given in the following section when you download SHARC.

2.2 Terms of Use

SHARC Program Suite

Copyright 2019, University of Vienna

SHARC is free software: you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

SHARC is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

A copy of the GNU General Public License is given below. It is also available at www.gnu.org/licenses/.

1. Preamble

The GNU General Public License is a free, copyleft license for software and other kinds of works.

The licenses for most software and other practical works are designed to take away your freedom to share and change the works. By contrast, the GNU General Public License is intended to guarantee your freedom to share and change all versions of a program--to make sure it remains free software for all its users. We, the Free Software Foundation, use the GNU General Public License for most of our software; it applies also to any other work released this way by its authors. You can apply it to your programs, too.

When we speak of free software, we are referring to freedom, not price. Our General Public Licenses are designed to make sure that you have the freedom to distribute copies of free software (and charge for them if you wish), that you receive source code or can get it if you want it, that you can change the software or use pieces of it in new free programs, and that you know you can do these things.

To protect your rights, we need to prevent others from denying you these rights or asking you to surrender the rights. Therefore, you have certain responsibilities if you distribute copies of the software, or if you modify it: responsibilities to respect the freedom of others.

For example, if you distribute copies of such a program, whether gratis or for a fee, you must pass on to the recipients the same freedoms that you received. You must make sure that they, too, receive or can get the source code. And you must show them these terms so they know their rights.

Developers that use the GNU GPL protect your rights with two steps: (1) assert copyright on the software, and (2) offer you this License giving you legal permission to copy, distribute and/or modify it.

For the developers’ and authors’ protection, the GPL clearly explains that there is no warranty for this free software. For both users’ and authors’ sake, the GPL requires that modified versions be marked as changed, so that their problems will not be attributed erroneously to authors of previous versions.

Some devices are designed to deny users access to install or run

modified versions of the software inside them, although the manufacturer

can do so. This is fundamentally incompatible with the aim of

protecting users’ freedom to change the software. The systematic

pattern of such abuse occurs in the area of products for individuals to

use, which is precisely where it is most unacceptable. Therefore, we

have designed this version of the GPL to prohibit the practice for those

products. If such problems arise substantially in other domains, we

stand ready to extend this provision to those domains in future versions

of the GPL, as needed to protect the freedom of users.

Finally, every program is threatened constantly by software patents.

States should not allow patents to restrict development and use of

software on general-purpose computers, but in those that do, we wish to

avoid the special danger that patents applied to a free program could

make it effectively proprietary. To prevent this, the GPL assures that

patents cannot be used to render the program non-free.

The precise terms and conditions for copying, distribution and

modification follow.

2. Terms and Conditions

- Definitions.

“This License” refers to version 3 of the GNU General Public License.

“Copyright” also means copyright-like laws that apply to other kinds of

works, such as semiconductor masks.

“The Program” refers to any copyrightable work licensed under this

License. Each licensee is addressed as “you”. “Licensees” and

“recipients” may be individuals or organizations.

To “modify” a work means to copy from or adapt all or part of the work

in a fashion requiring copyright permission, other than the making of an

exact copy. The resulting work is called a “modified version” of the

earlier work or a work “based on” the earlier work.

A “covered work” means either the unmodified Program or a work based

on the Program.

To “propagate” a work means to do anything with it that, without

permission, would make you directly or secondarily liable for

infringement under applicable copyright law, except executing it on a

computer or modifying a private copy. Propagation includes copying,

distribution (with or without modification), making available to the

public, and in some countries other activities as well.

To “convey” a work means any kind of propagation that enables other

parties to make or receive copies. Mere interaction with a user through

a computer network, with no transfer of a copy, is not conveying.

An interactive user interface displays “Appropriate Legal Notices”

to the extent that it includes a convenient and prominently visible

feature that (1) displays an appropriate copyright notice, and (2)

tells the user that there is no warranty for the work (except to the

extent that warranties are provided), that licensees may convey the

work under this License, and how to view a copy of this License. If

the interface presents a list of user commands or options, such as a

menu, a prominent item in the list meets this criterion.

- Source Code.

The “source code” for a work means the preferred form of the work

for making modifications to it. “Object code” means any non-source

form of a work.

A “Standard Interface” means an interface that either is an official

standard defined by a recognized standards body, or, in the case of

interfaces specified for a particular programming language, one that

is widely used among developers working in that language.

The “System Libraries” of an executable work include anything, other

than the work as a whole, that (a) is included in the normal form of

packaging a Major Component, but which is not part of that Major

Component, and (b) serves only to enable use of the work with that

Major Component, or to implement a Standard Interface for which an

implementation is available to the public in source code form. A

“Major Component”, in this context, means a major essential component

(kernel, window system, and so on) of the specific operating system

(if any) on which the executable work runs, or a compiler used to

produce the work, or an object code interpreter used to run it.

The “Corresponding Source” for a work in object code form means all

the source code needed to generate, install, and (for an executable

work) run the object code and to modify the work, including scripts to

control those activities. However, it does not include the work’s

System Libraries, or general-purpose tools or generally available free

programs which are used unmodified in performing those activities but

which are not part of the work. For example, Corresponding Source

includes interface definition files associated with source files for

the work, and the source code for shared libraries and dynamically

linked subprograms that the work is specifically designed to require,

such as by intimate data communication or control flow between those

subprograms and other parts of the work.

The Corresponding Source need not include anything that users

can regenerate automatically from other parts of the Corresponding

Source.

The Corresponding Source for a work in source code form is that

same work.

- Basic Permissions.

All rights granted under this License are granted for the term of

copyright on the Program, and are irrevocable provided the stated

conditions are met. This License explicitly affirms your unlimited

permission to run the unmodified Program. The output from running a

covered work is covered by this License only if the output, given its

content, constitutes a covered work. This License acknowledges your

rights of fair use or other equivalent, as provided by copyright law.

You may make, run and propagate covered works that you do not

convey, without conditions so long as your license otherwise remains

in force. You may convey covered works to others for the sole purpose

of having them make modifications exclusively for you, or provide you

with facilities for running those works, provided that you comply with

the terms of this License in conveying all material for which you do

not control copyright. Those thus making or running the covered works

for you must do so exclusively on your behalf, under your direction

and control, on terms that prohibit them from making any copies of

your copyrighted material outside their relationship with you.

Conveying under any other circumstances is permitted solely under

the conditions stated below. Sublicensing is not allowed; section 10

makes it unnecessary.

- Protecting Users’ Legal Rights From Anti-Circumvention Law.

No covered work shall be deemed part of an effective technological

measure under any applicable law fulfilling obligations under article

11 of the WIPO copyright treaty adopted on 20 December 1996, or

similar laws prohibiting or restricting circumvention of such

measures.

When you convey a covered work, you waive any legal power to forbid

circumvention of technological measures to the extent such circumvention

is effected by exercising rights under this License with respect to

the covered work, and you disclaim any intention to limit operation or

modification of the work as a means of enforcing, against the work’s

users, your or third parties’ legal rights to forbid circumvention of

technological measures.

- Conveying Verbatim Copies.

You may convey verbatim copies of the Program’s source code as you

receive it, in any medium, provided that you conspicuously and

appropriately publish on each copy an appropriate copyright notice;

keep intact all notices stating that this License and any

non-permissive terms added in accord with section 7 apply to the code;

keep intact all notices of the absence of any warranty; and give all

recipients a copy of this License along with the Program.

You may charge any price or no price for each copy that you convey,

and you may offer support or warranty protection for a fee.

- Conveying Modified Source Versions.

You may convey a work based on the Program, or the modifications to

produce it from the Program, in the form of source code under the

terms of section 4, provided that you also meet all of these conditions:

- The work must carry prominent notices stating that you modified

it, and giving a relevant date.

- The work must carry prominent notices stating that it is

released under this License and any conditions added under section

7. This requirement modifies the requirement in section 4 to

“keep intact all notices”.

- You must license the entire work, as a whole, under this

License to anyone who comes into possession of a copy. This

License will therefore apply, along with any applicable section 7

additional terms, to the whole of the work, and all its parts,

regardless of how they are packaged. This License gives no

permission to license the work in any other way, but it does not

invalidate such permission if you have separately received it.

- If the work has interactive user interfaces, each must display

Appropriate Legal Notices; however, if the Program has interactive

interfaces that do not display Appropriate Legal Notices, your

work need not make them do so.

A compilation of a covered work with other separate and independent

works, which are not by their nature extensions of the covered work,

and which are not combined with it such as to form a larger program,

in or on a volume of a storage or distribution medium, is called an

“aggregate” if the compilation and its resulting copyright are not

used to limit the access or legal rights of the compilation’s users

beyond what the individual works permit. Inclusion of a covered work

in an aggregate does not cause this License to apply to the other

parts of the aggregate.

- Conveying Non-Source Forms.

You may convey a covered work in object code form under the terms

of sections 4 and 5, provided that you also convey the

machine-readable Corresponding Source under the terms of this License,

in one of these ways:

- Convey the object code in, or embodied in, a physical product

(including a physical distribution medium), accompanied by the

Corresponding Source fixed on a durable physical medium

customarily used for software interchange.

- Convey the object code in, or embodied in, a physical product

(including a physical distribution medium), accompanied by a

written offer, valid for at least three years and valid for as

long as you offer spare parts or customer support for that product

model, to give anyone who possesses the object code either (1) a

copy of the Corresponding Source for all the software in the

product that is covered by this License, on a durable physical

medium customarily used for software interchange, for a price no

more than your reasonable cost of physically performing this

conveying of source, or (2) access to copy the

Corresponding Source from a network server at no charge.

- Convey individual copies of the object code with a copy of the

written offer to provide the Corresponding Source. This

alternative is allowed only occasionally and noncommercially, and

only if you received the object code with such an offer, in accord

with subsection 6b.

- Convey the object code by offering access from a designated

place (gratis or for a charge), and offer equivalent access to the

Corresponding Source in the same way through the same place at no

further charge. You need not require recipients to copy the

Corresponding Source along with the object code. If the place to

copy the object code is a network server, the Corresponding Source

may be on a different server (operated by you or a third party)

that supports equivalent copying facilities, provided you maintain

clear directions next to the object code saying where to find the

Corresponding Source. Regardless of what server hosts the

Corresponding Source, you remain obligated to ensure that it is

available for as long as needed to satisfy these requirements.

- Convey the object code using peer-to-peer transmission, provided

you inform other peers where the object code and Corresponding

Source of the work are being offered to the general public at no

charge under subsection 6d.

A separable portion of the object code, whose source code is excluded

from the Corresponding Source as a System Library, need not be

included in conveying the object code work.

A “User Product” is either (1) a “consumer product”, which means any

tangible personal property which is normally used for personal, family,

or household purposes, or (2) anything designed or sold for incorporation

into a dwelling. In determining whether a product is a consumer product,

doubtful cases shall be resolved in favor of coverage. For a particular

product received by a particular user, “normally used” refers to a

typical or common use of that class of product, regardless of the status

of the particular user or of the way in which the particular user

actually uses, or expects or is expected to use, the product. A product

is a consumer product regardless of whether the product has substantial

commercial, industrial or non-consumer uses, unless such uses represent

the only significant mode of use of the product.

“Installation Information” for a User Product means any methods,

procedures, authorization keys, or other information required to install

and execute modified versions of a covered work in that User Product from

a modified version of its Corresponding Source. The information must

suffice to ensure that the continued functioning of the modified object

code is in no case prevented or interfered with solely because

modification has been made.

If you convey an object code work under this section in, or with, or

specifically for use in, a User Product, and the conveying occurs as

part of a transaction in which the right of possession and use of the

User Product is transferred to the recipient in perpetuity or for a

fixed term (regardless of how the transaction is characterized), the

Corresponding Source conveyed under this section must be accompanied

by the Installation Information. But this requirement does not apply

if neither you nor any third party retains the ability to install

modified object code on the User Product (for example, the work has

been installed in ROM).

The requirement to provide Installation Information does not include a

requirement to continue to provide support service, warranty, or updates

for a work that has been modified or installed by the recipient, or for

the User Product in which it has been modified or installed. Access to a

network may be denied when the modification itself materially and

adversely affects the operation of the network or violates the rules and

protocols for communication across the network.

Corresponding Source conveyed, and Installation Information provided,

in accord with this section must be in a format that is publicly

documented (and with an implementation available to the public in

source code form), and must require no special password or key for

unpacking, reading or copying.

- Additional Terms.

“Additional permissions” are terms that supplement the terms of this

License by making exceptions from one or more of its conditions.

Additional permissions that are applicable to the entire Program shall

be treated as though they were included in this License, to the extent

that they are valid under applicable law. If additional permissions

apply only to part of the Program, that part may be used separately

under those permissions, but the entire Program remains governed by

this License without regard to the additional permissions.

When you convey a copy of a covered work, you may at your option

remove any additional permissions from that copy, or from any part of

it. (Additional permissions may be written to require their own

removal in certain cases when you modify the work.) You may place

additional permissions on material, added by you to a covered work,

for which you have or can give appropriate copyright permission.

Notwithstanding any other provision of this License, for material you

add to a covered work, you may (if authorized by the copyright holders of

that material) supplement the terms of this License with terms:

- Disclaiming warranty or limiting liability differently from the

terms of sections 15 and 16 of this License; or

- Requiring preservation of specified reasonable legal notices or

author attributions in that material or in the Appropriate Legal

Notices displayed by works containing it; or

- Prohibiting misrepresentation of the origin of that material, or

requiring that modified versions of such material be marked in

reasonable ways as different from the original version; or

- Limiting the use for publicity purposes of names of licensors or

authors of the material; or

- Declining to grant rights under trademark law for use of some

trade names, trademarks, or service marks; or

- Requiring indemnification of licensors and authors of that

material by anyone who conveys the material (or modified versions of

it) with contractual assumptions of liability to the recipient, for

any liability that these contractual assumptions directly impose on

those licensors and authors.

All other non-permissive additional terms are considered “further

restrictions” within the meaning of section 10. If the Program as you

received it, or any part of it, contains a notice stating that it is

governed by this License along with a term that is a further

restriction, you may remove that term. If a license document contains

a further restriction but permits relicensing or conveying under this

License, you may add to a covered work material governed by the terms

of that license document, provided that the further restriction does

not survive such relicensing or conveying.

If you add terms to a covered work in accord with this section, you

must place, in the relevant source files, a statement of the

additional terms that apply to those files, or a notice indicating

where to find the applicable terms.

Additional terms, permissive or non-permissive, may be stated in the

form of a separately written license, or stated as exceptions;

the above requirements apply either way.

- Termination.

You may not propagate or modify a covered work except as expressly

provided under this License. Any attempt otherwise to propagate or

modify it is void, and will automatically terminate your rights under

this License (including any patent licenses granted under the third

paragraph of section 11).

However, if you cease all violation of this License, then your

license from a particular copyright holder is reinstated (a)

provisionally, unless and until the copyright holder explicitly and

finally terminates your license, and (b) permanently, if the copyright

holder fails to notify you of the violation by some reasonable means

prior to 60 days after the cessation.

Moreover, your license from a particular copyright holder is

reinstated permanently if the copyright holder notifies you of the

violation by some reasonable means, this is the first time you have

received notice of violation of this License (for any work) from that

copyright holder, and you cure the violation prior to 30 days after

your receipt of the notice.

Termination of your rights under this section does not terminate the

licenses of parties who have received copies or rights from you under

this License. If your rights have been terminated and not permanently

reinstated, you do not qualify to receive new licenses for the same

material under section 10.

- Acceptance Not Required for Having Copies.

You are not required to accept this License in order to receive or

run a copy of the Program. Ancillary propagation of a covered work

occurring solely as a consequence of using peer-to-peer transmission

to receive a copy likewise does not require acceptance. However,

nothing other than this License grants you permission to propagate or

modify any covered work. These actions infringe copyright if you do

not accept this License. Therefore, by modifying or propagating a

covered work, you indicate your acceptance of this License to do so.

- Automatic Licensing of Downstream Recipients.

Each time you convey a covered work, the recipient automatically

receives a license from the original licensors, to run, modify and

propagate that work, subject to this License. You are not responsible

for enforcing compliance by third parties with this License.

An “entity transaction” is a transaction transferring control of an

organization, or substantially all assets of one, or subdividing an

organization, or merging organizations. If propagation of a covered

work results from an entity transaction, each party to that

transaction who receives a copy of the work also receives whatever

licenses to the work the party’s predecessor in interest had or could

give under the previous paragraph, plus a right to possession of the

Corresponding Source of the work from the predecessor in interest, if

the predecessor has it or can get it with reasonable efforts.

You may not impose any further restrictions on the exercise of the

rights granted or affirmed under this License. For example, you may

not impose a license fee, royalty, or other charge for exercise of

rights granted under this License, and you may not initiate litigation

(including a cross-claim or counterclaim in a lawsuit) alleging that

any patent claim is infringed by making, using, selling, offering for

sale, or importing the Program or any portion of it.

- Patents.

A “contributor” is a copyright holder who authorizes use under this

License of the Program or a work on which the Program is based. The

work thus licensed is called the contributor’s “contributor version”.

A contributor’s “essential patent claims” are all patent claims

owned or controlled by the contributor, whether already acquired or

hereafter acquired, that would be infringed by some manner, permitted

by this License, of making, using, or selling its contributor version,

but do not include claims that would be infringed only as a

consequence of further modification of the contributor version. For

purposes of this definition, “control” includes the right to grant

patent sublicenses in a manner consistent with the requirements of

this License.

Each contributor grants you a non-exclusive, worldwide, royalty-free

patent license under the contributor’s essential patent claims, to

make, use, sell, offer for sale, import and otherwise run, modify and

propagate the contents of its contributor version.

In the following three paragraphs, a “patent license” is any express

agreement or commitment, however denominated, not to enforce a patent

(such as an express permission to practice a patent or covenant not to

sue for patent infringement). To “grant” such a patent license to a

party means to make such an agreement or commitment not to enforce a

patent against the party.

If you convey a covered work, knowingly relying on a patent license,

and the Corresponding Source of the work is not available for anyone

to copy, free of charge and under the terms of this License, through a

publicly available network server or other readily accessible means,

then you must either (1) cause the Corresponding Source to be so

available, or (2) arrange to deprive yourself of the benefit of the

patent license for this particular work, or (3) arrange, in a manner

consistent with the requirements of this License, to extend the patent

license to downstream recipients. “Knowingly relying” means you have

actual knowledge that, but for the patent license, your conveying the

covered work in a country, or your recipient’s use of the covered work

in a country, would infringe one or more identifiable patents in that

country that you have reason to believe are valid.

If, pursuant to or in connection with a single transaction or

arrangement, you convey, or propagate by procuring conveyance of, a

covered work, and grant a patent license to some of the parties

receiving the covered work authorizing them to use, propagate, modify

or convey a specific copy of the covered work, then the patent license

you grant is automatically extended to all recipients of the covered

work and works based on it.

A patent license is “discriminatory” if it does not include within

the scope of its coverage, prohibits the exercise of, or is

conditioned on the non-exercise of one or more of the rights that are

specifically granted under this License. You may not convey a covered

work if you are a party to an arrangement with a third party that is

in the business of distributing software, under which you make payment

to the third party based on the extent of your activity of conveying

the work, and under which the third party grants, to any of the

parties who would receive the covered work from you, a discriminatory

patent license (a) in connection with copies of the covered work

conveyed by you (or copies made from those copies), or (b) primarily

for and in connection with specific products or compilations that

contain the covered work, unless you entered into that arrangement,

or that patent license was granted, prior to 28 March 2007.

Nothing in this License shall be construed as excluding or limiting

any implied license or other defenses to infringement that may

otherwise be available to you under applicable patent law.

- No Surrender of Others’ Freedom.

If conditions are imposed on you (whether by court order, agreement or

otherwise) that contradict the conditions of this License, they do not

excuse you from the conditions of this License. If you cannot convey a

covered work so as to satisfy simultaneously your obligations under this

License and any other pertinent obligations, then as a consequence you may

not convey it at all. For example, if you agree to terms that obligate you

to collect a royalty for further conveying from those to whom you convey

the Program, the only way you could satisfy both those terms and this

License would be to refrain entirely from conveying the Program.

- Use with the GNU Affero General Public License.

Notwithstanding any other provision of this License, you have

permission to link or combine any covered work with a work licensed

under version 3 of the GNU Affero General Public License into a single

combined work, and to convey the resulting work. The terms of this

License will continue to apply to the part which is the covered work,

but the special requirements of the GNU Affero General Public License,

section 13, concerning interaction through a network will apply to the

combination as such.

- Revised Versions of this License.

The Free Software Foundation may publish revised and/or new versions of

the GNU General Public License from time to time. Such new versions will

be similar in spirit to the present version, but may differ in detail to

address new problems or concerns.

Each version is given a distinguishing version number. If the

Program specifies that a certain numbered version of the GNU General

Public License “or any later version” applies to it, you have the

option of following the terms and conditions either of that numbered

version or of any later version published by the Free Software

Foundation. If the Program does not specify a version number of the

GNU General Public License, you may choose any version ever published

by the Free Software Foundation.

If the Program specifies that a proxy can decide which future

versions of the GNU General Public License can be used, that proxy’s

public statement of acceptance of a version permanently authorizes you

to choose that version for the Program.

Later license versions may give you additional or different

permissions. However, no additional obligations are imposed on any

author or copyright holder as a result of your choosing to follow a

later version.

- Disclaimer of Warranty.

THERE IS NO WARRANTY FOR THE PROGRAM, TO THE EXTENT PERMITTED BY

APPLICABLE LAW. EXCEPT WHEN OTHERWISE STATED IN WRITING THE

COPYRIGHT HOLDERS AND/OR OTHER PARTIES PROVIDE THE PROGRAM “AS IS”

WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESSED OR IMPLIED,

INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF

MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE ENTIRE

RISK AS TO THE QUALITY AND PERFORMANCE OF THE PROGRAM IS WITH YOU.

SHOULD THE PROGRAM PROVE DEFECTIVE, YOU ASSUME THE COST OF ALL

NECESSARY SERVICING, REPAIR OR CORRECTION.

- Limitation of Liability.

IN NO EVENT UNLESS REQUIRED BY APPLICABLE LAW OR AGREED TO IN

WRITING WILL ANY COPYRIGHT HOLDER, OR ANY OTHER PARTY WHO MODIFIES

AND/OR CONVEYS THE PROGRAM AS PERMITTED ABOVE, BE LIABLE TO YOU FOR

DAMAGES, INCLUDING ANY GENERAL, SPECIAL, INCIDENTAL OR CONSEQUENTIAL

DAMAGES ARISING OUT OF THE USE OR INABILITY TO USE THE PROGRAM

(INCLUDING BUT NOT LIMITED TO LOSS OF DATA OR DATA BEING RENDERED

INACCURATE OR LOSSES SUSTAINED BY YOU OR THIRD PARTIES OR A FAILURE

OF THE PROGRAM TO OPERATE WITH ANY OTHER PROGRAMS), EVEN IF SUCH

HOLDER OR OTHER PARTY HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH

DAMAGES.

- Interpretation of Sections 15 and 16.

If the disclaimer of warranty and limitation of liability provided

above cannot be given local legal effect according to their terms,

reviewing courts shall apply local law that most closely approximates

an absolute waiver of all civil liability in connection with the

Program, unless a warranty or assumption of liability accompanies a

copy of the Program in return for a fee.

2.3 Installation

In order to install and run SHARC under Linux (Windows and OS X are currently not supported), you need the following:

- A Fortran90 compiler (This release is tested against GNU Fortran 8.5.0 and Intel Fortran 2021.8.0).

- The BLAS, LAPACK and FFTW3 libraries.

- Python 3 (This release is tested against Python 3.10).

- make.

- git.

- [Anaconda with several libraries (hdf5, hdf5_hl, netcdf, mfhdf, df, jpeg, gcc) for the pysharc and NetCDF functionalities.]
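Before compiling, it can be convenient to check that the required command-line tools are on the PATH. The following is only a minimal sketch and not part of the SHARC distribution: the helper name check_tool is hypothetical, and gfortran is assumed as the compiler command (substitute the Intel compiler command if you build with Intel Fortran). The sketch does not check for the BLAS, LAPACK and FFTW3 libraries.

```shell
#!/bin/sh
# Report whether a command is available on PATH.
# check_tool is a hypothetical helper, not a SHARC script.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "found:   $1"
    else
        echo "missing: $1"
    fi
}

# Tools from the requirements list above; gfortran is an assumption.
for tool in gfortran python3 make git; do
    check_tool "$tool"
done
```

Any tool reported as missing should be installed through the package manager of your Linux distribution before continuing.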

Extracting

The source code of the SHARC suite is distributed via GitHub. To install it, first clone the repository into a suitable directory (a directory called sharc will be created there automatically):

git clone https://github.com/sharc-md/sharc.git

As mentioned above, this should create a new directory called sharc/ which contains all the necessary subdirectories and files.

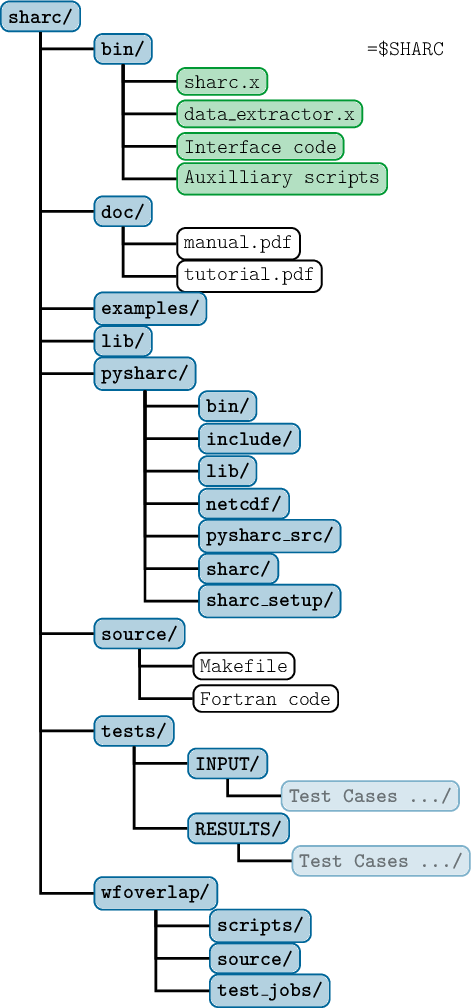

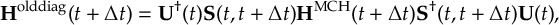

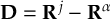

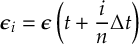

In figure 2.1 the directory structure of the complete SHARC directory is shown.

Compiling and installing (without pysharc)

To compile the Fortran90 programs of the SHARC suite, go to the source/ directory:

cd source/

and edit the Makefile by adjusting the variables in the top part.

If you only want to install regular SHARC (no pysharc or NetCDF), set USE_PYSHARC to false.

Then, inside source/ issuing the command:

make

will compile the source and create all the binaries.

make install

will additionally copy the binary files into the sharc/bin/ directory of the SHARC distribution, which already contains all the Python scripts that come with SHARC.

Compiling and installing (with pysharc)

If you intend to perform computations with pysharc (with LVC models) or with NetCDF functionality, you need to set USE_PYSHARC to true in source/Makefile.

Note that currently, some new options (adaptive time steps, time uncertainty hopping, decay of mixing decoherence, and Ehrenfest dynamics) do not work with pysharc.

In order to compile with pysharc, it is necessary to first create a suitable Python installation through the Anaconda distribution.

A working minimal environment can be created with:

conda create -n pysharc_3.0 -c conda-forge python=3.9 numpy scipy h5py six matplotlib python-dateutil pyyaml pyparsing kiwisolver cycler netcdf4 hdf5 h5utils gfortran_linux-64

Subsequently, the environment has to be activated with

conda activate pysharc_3.0

before one can proceed with the installation of pysharc.

Due to a number of dependencies, compiling with pysharc is slightly more complicated than without.

The simplest way to compile both pysharc and the regular executables together is to

- run make install in pysharc/, then

- run make install in source/.

Alternatively, you might want to compile the regular executables without pysharc or NetCDF, and then compile pysharc.

To do this:

- set USE_PYSHARC to false,

- run make install in source/,

- run make clean in source/,

- set USE_PYSHARC to true,

- run make install in pysharc/.

Environment setup

In order to use the SHARC suite, you have to set some environment variables. The recommended approach is to source either of the sharcvars files (generated by make) that are located in the bin/ directory. For example, if you have cloned SHARC into your home directory, just use:

source ~/sharc/bin/sharcvars.sh (for bourne shell users)

or

source ~/sharc/bin/sharcvars.csh (for c-shell type users)

Note that it may be convenient to put this line into your shell’s login scripts.

The aforementioned procedure works for SHARC 2.1 and later versions. For older versions, set the environment variable $SHARC to the bin/ directory of the SHARC installation.

This ensures that all programs of the SHARC suite find the other executables and all calls are successful.

For example, if you have unpacked SHARC into your home directory, just set:

export SHARC=~/sharc/bin (for bourne shell users)

or

setenv SHARC $HOME/sharc/bin (for c-shell type users)
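The following is a minimal sketch of how one might verify the environment setup before starting any calculation. It is not part of the SHARC distribution. The function name check_sharc_env is hypothetical, and the check assumes that $SHARC is set as described above and that make install has placed the dynamics executable sharc.x in the bin/ directory.

```python
import os
from pathlib import Path


def check_sharc_env(env=None):
    """Return a list of problems found with the $SHARC setting (empty if none)."""
    env = os.environ if env is None else env
    problems = []
    sharc = env.get("SHARC")
    if not sharc:
        problems.append("SHARC is not set; source sharcvars.sh or set it manually")
        return problems
    bin_dir = Path(sharc).expanduser()
    if not bin_dir.is_dir():
        problems.append(f"SHARC points to {bin_dir}, which is not a directory")
    elif not (bin_dir / "sharc.x").is_file():
        # Assumption: 'make install' copies the executable sharc.x into bin/.
        problems.append(f"sharc.x not found in {bin_dir}; was 'make install' run?")
    return problems


if __name__ == "__main__":
    for line in check_sharc_env() or ["SHARC environment looks consistent"]:
        print(line)
```

An empty list means that the variable points to a directory containing sharc.x; it does not verify that the executables actually run.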

2.3.1 WFOVERLAP Program

The SHARC package contains as a submodule the program WFOVERLAP, which is necessary for many functionalities of SHARC.

In order to install and test this program, see section 6.13.

2.3.2 Libraries

SHARC requires the BLAS, LAPACK and FFTW3 libraries. During the installation, it might be necessary to alter the LDFLAGS string in the Makefile, depending on where the relevant libraries are located on your system. In this way, it is for example possible to use vendor-provided libraries like the Intel MKL. For more details see the INSTALL file which is included in the SHARC distribution.

As specified above, users of pysharc and the NetCDF functions will need additional libraries, e.g., obtained through ANACONDA:

conda create -n pysharc_3.0 -c conda-forge python=3.9 numpy scipy h5py six matplotlib python-dateutil pyyaml pyparsing kiwisolver cycler netcdf4 hdf5 h5utils gfortran_linux-64

2.3.3 Test Suite

Table 2.1: Environment variables requested by the test suite.

| Variable | Description |
| --- | --- |
| $ADF | Points to the main directory of the ADF installation, which contains the file adfrc.sh and subdirectory bin/. |
| $BAGEL | Points to the main directory of the BAGEL installation, which contains subdirectories bin/ and lib/. |
| $COLUMBUS | Points to the directory containing the COLUMBUS executables, e.g., runls. |
| $GAUSSIAN | Points to the main directory of the GAUSSIAN installation, which contains the GAUSSIAN executables (e.g., g09/g16 or l9999.exe). |
| $MOLCAS | Points to the main directory of the OPENMOLCAS installation, containing molcas.rte and directories basis_library/ and bin/. |
| $MOLPRO | Points to the bin/ directory of the MOLPRO installation, which contains the molpro.exe file. |
| $TURBOMOLE | Points to the main directory of the TURBOMOLE installation, which contains subdirectories like basen/, bin/, or scripts/. |
| $ORCA | Points to the directory containing the ORCA executables, e.g., orca, orca_gtoint, or orca_fragovl. |
| $THEODORE | Points to the main directory of the THEODORE installation. $THEODORE/bin/ should contain analyze_tden.py. |
| $TINKER | Points to the main directory of a MOLCAS-modified TINKER installation. $TINKER/bin should contain tkr2qm_s. |
| $PYQUANTE | Points to the main directory of PYQUANTE, containing the PyQuante/ subdirectory that can be imported by Python as a module. |
| $molcas | Should point to the same location as $MOLCAS, or another MOLCAS installation. Note that $molcas is only used by some COLUMBUS test jobs. Also note that $molcas does not need to point to the MOLCAS installation interfaced to COLUMBUS. |
| $orca | Should point to the same location as $ORCA, or another ORCA installation. Note that $orca is only used by some tests where ORCA is used as helper program (e.g., for setup_orca_opt.py or spin-orbit calculations with SHARC_RICC2.py). |

After the installation, it is advisable to first execute the test suite of SHARC, which will test the fundamental functionality of SHARC.

Change to an empty directory and execute

$SHARC/tests.py

The interactive script will first verify the Python installation (no message will appear if the Python installation is fine).

Subsequently, the script prompts the user to enter which tests should be executed.

The script will also ask for a number of environment variables, which are listed in Table 2.1.

There is at least one test for each of the auxiliary scripts and interfaces.

Tests whose names start with scripts_ test the functionality of the auxiliary programs in the SHARC suite.

Tests whose names start with ADF_, Analytical_, COLUMBUS_, GAUSSIAN_, LVC_, MOLCAS_, MOLPRO_, or TURBOMOLE_ run short trajectories, testing whether the main dynamics code, the interfaces, the quantum chemistry programs, and auxiliary programs (THEODORE, WFOVERLAP, ORCA, TINKER) work together correctly.

If the installation was successful and Python is installed correctly, Analytical_overlap, LVC_overlap, and most tests named scripts_<NAME> should execute without error.

The test calculations involving the quantum chemistry programs can be used to check that SHARC can correctly call these programs and that they are installed correctly.

If any of the tests show differences between output and reference output, it is advisable to check the respective files (i.e., compare $SHARC/../tests/RESULTS/<job>/ to ./RUNNING_TESTS/<job>/). Note that small differences (different sign of values or small numerical deviations) in the output can already occur when using a different version of the quantum chemistry programs, different compilers, different libraries, or different parallelization schemes.

It should be noted that along trajectories, these small changes can add up to notably influence the trajectories, but across the ensemble these small changes will likely cancel out.
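Before starting tests.py, it can be helpful to see which of the variables from Table 2.1 are already set in the current shell. The snippet below is a hedged sketch of such a check and is not part of the SHARC suite. The function name report_test_variables is hypothetical, the variable names are copied from Table 2.1, and only the variables needed for the selected tests have to be set.

```python
import os

# Variable names copied from Table 2.1 (without the leading "$").
TEST_VARIABLES = [
    "ADF", "BAGEL", "COLUMBUS", "GAUSSIAN", "MOLCAS", "MOLPRO", "TURBOMOLE",
    "ORCA", "THEODORE", "TINKER", "PYQUANTE", "molcas", "orca",
]


def report_test_variables(env=None):
    """Map each variable to 'unset', 'ok', or 'not a directory'."""
    env = os.environ if env is None else env
    report = {}
    for name in TEST_VARIABLES:
        value = env.get(name)
        if value is None:
            report[name] = "unset"
        elif os.path.isdir(os.path.expanduser(value)):
            # According to Table 2.1, every variable points to a directory.
            report[name] = "ok"
        else:
            report[name] = "not a directory"
    return report


if __name__ == "__main__":
    for name, status in report_test_variables().items():
        print(f"${name:<10} {status}")
```

The check only confirms that each path exists as a directory; it does not inspect the directory contents listed in the table.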

2.3.4 Additional Programs

For full functionality of the SHARC suite, several additional programs are recommended (all of these programs are currently freely available, except for AMBER):

- The Python package NUMPY. Optimally, the NUMPY package (which provides many numerical methods, e.g., matrix diagonalization) should be available within your Python installation. If NUMPY is not available, some parts of the SHARC suite are still functional, and the affected scripts will fall back to a small Fortran code (a front-end for LAPACK) within the SHARC package. Since no large-scale matrix calculations are carried out in the Python scripts, there should be no significant performance loss if NUMPY is not available.

NUMPY is mandatory for dynamics with the ADF interface, with LVC models, and for all pysharc functionalities.

- The Python package MATPLOTLIB. If the MATPLOTLIB package is available, some auxiliary scripts (trajana_nma.py and trajana_essdyn.py) can automatically generate certain plots.

- The GNUPLOT plotting software. GNUPLOT is not strictly necessary, since all output files could be plotted using other plotting programs. However, a number of scripts from the SHARC suite automatically generate GNUPLOT scripts after data processing, so that the results can be plotted quickly.

- A molecular visualization software able to read xyz files (e.g., MOLDEN, GABEDIT, MOLEKEL or VMD). Molecular visualization software is needed in order to animate molecular motion in the dynamics.

- The THEODORE wave function analysis suite (version 2.0 or higher). The wave function analysis package THEODORE can compute various descriptors of electronic wave functions (supported by some interfaces), which is helpful for following the state characters along trajectories.

- The AMBER molecular dynamics package. AMBER can be used to prepare initial conditions based on ground state molecular dynamics simulations (instead of using a Wigner distribution), which is especially useful for large systems.

- The ORCA ab initio package. ORCA can be employed as external optimizer. In combination with the SHARC interfaces, it is possible to perform optimizations of minima, conical intersections, and crossing points for any method interfaced to SHARC.

2.3.5 Quantum Chemistry Programs

Even though SHARC comes with two interfaces for analytical potentials (and hence can be used without any quantum chemistry program), the main application of SHARC is certainly on-the-fly ab initio dynamics. Hence, one of the following interfaced quantum chemistry programs is necessary:

- MOLPRO (this release was checked against MOLPRO 2010 and 2012).

- OPENMOLCAS (this release was checked against OPENMOLCAS 22).

- TINKER, interfaced to OPENMOLCAS 22, for QM/MM dynamics.

- MOLCAS (this release was checked against MOLCAS 8.3 and 8.4).

- TINKER, interfaced to MOLCAS, for QM/MM dynamics.

- COLUMBUS 7

- COLUMBUS-MOLCAS interface for spin-orbit couplings.

- AMSTERDAM DENSITY FUNCTIONAL (only ADF 2019 or newer)

- TURBOMOLE (this release was checked against TURBOMOLE 6.6, 7.0, 7.1, 7.2, 7.3, and 7.4).

- GAUSSIAN (this release was checked against GAUSSIAN 09 and 16).

- ORCA (version 4.1, 4.2, or 5.0).

- TINKER (this release was checked against version 6.3.3) for QM/MM dynamics.

- BAGEL (commit 0ea6b59 from Mar 27, 2019 or newer).

- PyQuante for overlap calculations

See the relevant sections in chapter 6 for a description of the quantum chemical methods available with each of these programs.

Chapter 3

Execution

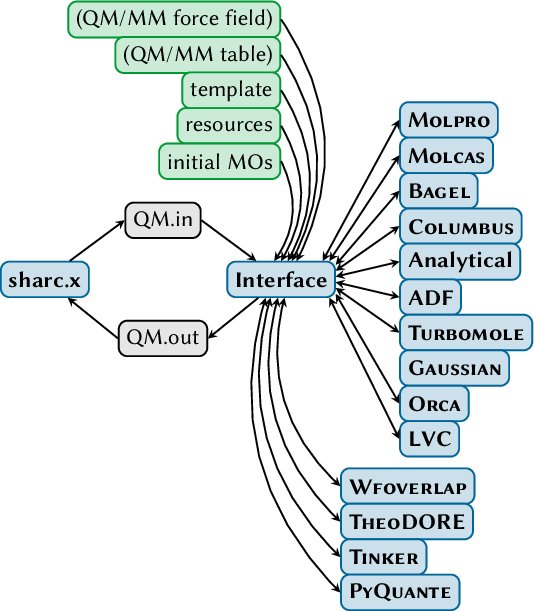

The SHARC suite consists of the main dynamics code sharc.x and a number of auxiliary programs, like setup scripts and analysis tools. Additionally, the suite comes with interfaces to several quantum chemistry programs: MOLPRO, MOLCAS, COLUMBUS, TURBOMOLE, ADF, GAUSSIAN, ORCA, and BAGEL.

In the following, first it is explained how to run a single trajectory by setting up all necessary input for the dynamics code sharc.x manually. Afterwards, the usage of the auxiliary scripts is explained.

Detailed information on the SHARC input files is given in chapter 4.

Chapter 5 documents the different output files SHARC produces.

The interfaces are described in chapter 6 and the auxiliary scripts in chapter 7.

All relevant theoretical background is given in chapter 8.

3.1 Running a single trajectory

3.1.1 Input files

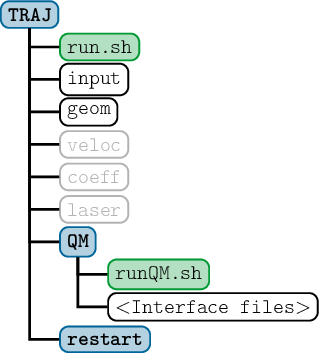

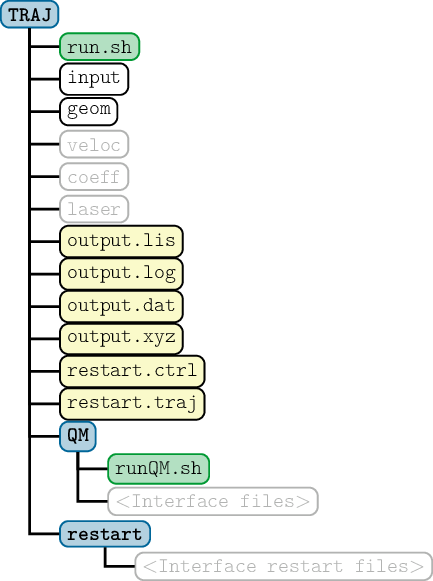

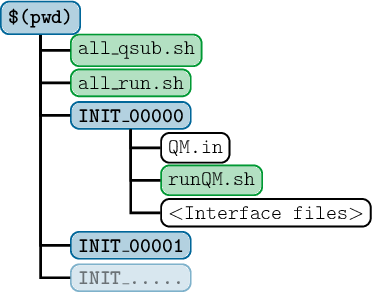

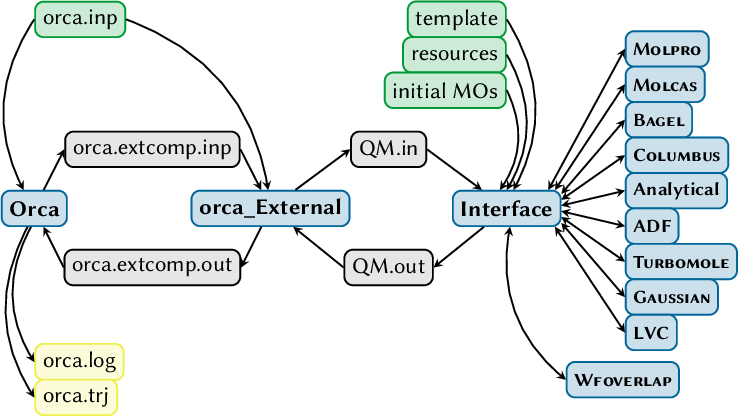

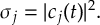

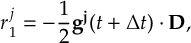

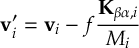

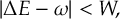

SHARC requires a number of input files, which contain the settings for the dynamics simulation (input), the initial geometry (geom), the initial velocity (veloc), the initial coefficients (coeff) and the laser field (laser). Only the first two (input, geom) are mandatory; the others are optional. The necessary files are shown in figure 3.1.

The content of the main input file is explained in detail in section 4.1, the geometry file is specified in section 4.2. The specifications of the velocity, coefficient and laser files are given in sections 4.3, 4.4 and 4.5, respectively.
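As an illustration of the file requirements described above, the following sketch checks a trajectory directory for the mandatory and optional input files. It is not part of the SHARC suite. The function name check_input_files is hypothetical, and the check assumes that the files carry exactly the names given above (input, geom, veloc, coeff, laser).

```python
from pathlib import Path

MANDATORY = ("input", "geom")           # must always be present
OPTIONAL = ("veloc", "coeff", "laser")  # used only if the simulation needs them


def check_input_files(directory):
    """Return (missing mandatory files, optional files that are present)."""
    directory = Path(directory)
    missing = [name for name in MANDATORY if not (directory / name).is_file()]
    present_optional = [name for name in OPTIONAL if (directory / name).is_file()]
    return missing, present_optional


if __name__ == "__main__":
    missing, optional = check_input_files(".")
    print("missing mandatory files:", ", ".join(missing) or "none")
    print("optional files found:", ", ".join(optional) or "none")
```

The check looks only at file existence; the contents must still follow the specifications in chapter 4.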

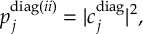

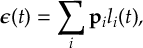

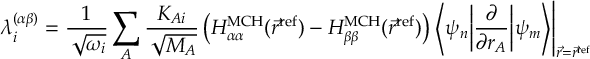

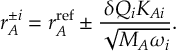

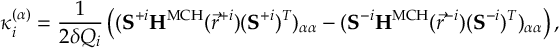

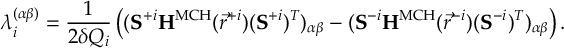

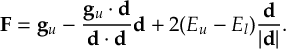

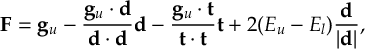

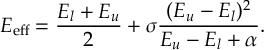

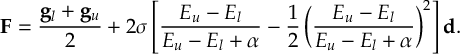

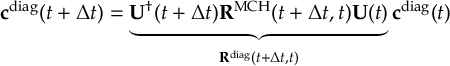

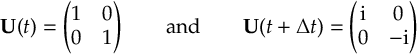

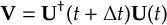

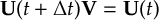

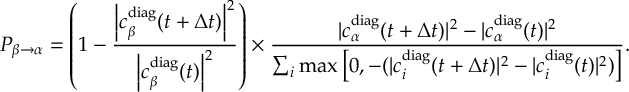

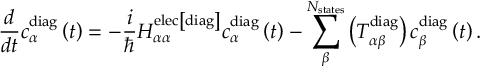

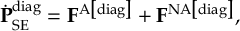

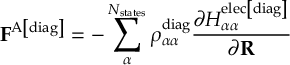

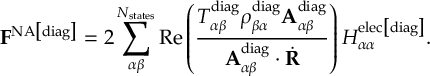

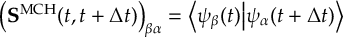

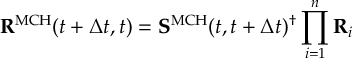

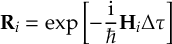

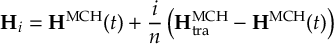

Additionally, the directory QM/ and the script QM/runQM.sh need to be present, since the on-the-fly ab initio calculations are implemented through these files. The script QM/runQM.sh is called each time SHARC performs an on-the-fly calculation of electronic properties (usually by a quantum chemistry program). In order to do so, SHARC first writes the request for the calculation to QM/QM.in, then calls QM/runQM.sh, waits for the script to finish, and then reads the requested quantities from QM/QM.out. The script QM/runQM.sh is fully responsible for generating the requested results from the provided input.
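The sequence described above (write QM/QM.in, call QM/runQM.sh, wait, read QM/QM.out) can be mimicked with a few lines of Python, for example to test a runQM.sh script by hand. This is only a sketch of the file-based handshake, not the code used by sharc.x. The function name run_qm_step is hypothetical, the request text is a placeholder (the actual format is specified in section 6.1.1), and invoking the script through sh from the trajectory directory is an assumption.

```python
import subprocess
from pathlib import Path


def run_qm_step(traj_dir, request_text):
    """Mimic one on-the-fly call: write QM.in, run runQM.sh, read QM.out."""
    traj_dir = Path(traj_dir)
    qm_dir = traj_dir / "QM"

    # 1. Write the request for the calculation.
    (qm_dir / "QM.in").write_text(request_text)

    # 2. Call the script and wait until it has finished.
    #    Assumption: the script is started with sh from the trajectory directory.
    #    check=True raises an error if the script exits with a non-zero status.
    subprocess.run(["sh", "QM/runQM.sh"], cwd=traj_dir, check=True)

    # 3. Read the results produced by the script.
    return (qm_dir / "QM.out").read_text()
```

Because the exchange happens entirely through files, runQM.sh can wrap any program that is able to produce a valid QM.out from the given QM.in.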